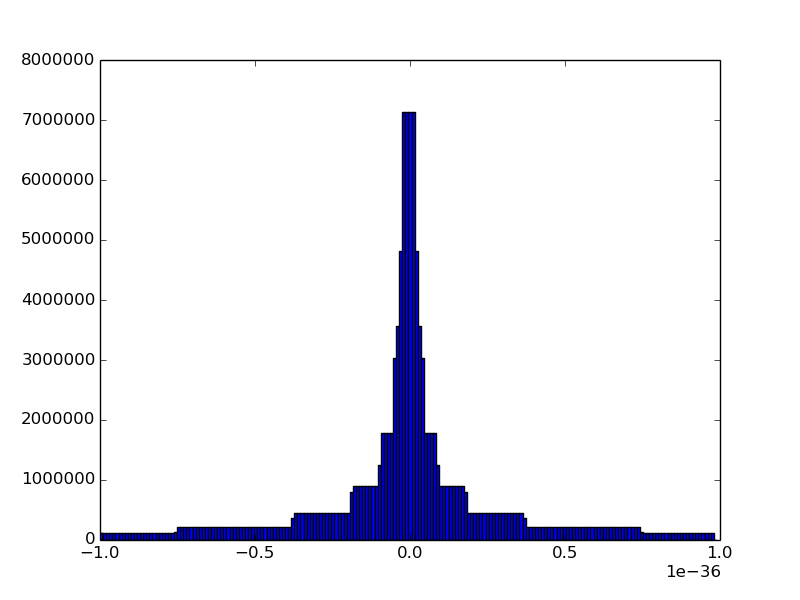

The spike at the heart of floats

IEEE 754 floating point numbers are in practically every programming language out there. Most people have come across or have been bitten by this:

0.1 + 0.2 != 0.3

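This inequality shows up in any language that uses IEEE 754 double-precision floats (binary64): none of 0.1, 0.2, or 0.3 has an exact binary representation, and the rounded sum lands one representable step away from the rounded 0.3.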
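Here is a minimal sketch of that inequality in Rust, using only the standard library. The helper name `steps_apart` is made up for this example, and it assumes both inputs are finite and positive so that comparing raw bit patterns is meaningful.

```rust
/// How many representable f64 values separate `a` from `b`.
/// Assumes both inputs are finite and positive, so that the order of
/// the raw bit patterns matches the order of the values.
fn steps_apart(a: f64, b: f64) -> u64 {
    let (x, y) = (a.to_bits(), b.to_bits());
    if x > y { x - y } else { y - x }
}

fn main() {
    let sum = 0.1_f64 + 0.2_f64;
    println!("0.1 + 0.2 = {:.17}", sum);
    println!("0.3       = {:.17}", 0.3_f64);
    println!("equal in f64: {}", sum == 0.3_f64);
    println!("steps apart:  {}", steps_apart(sum, 0.3));

    // The same expression in single precision, for comparison.
    let sum32 = 0.1_f32 + 0.2_f32;
    println!("equal in f32: {}", sum32 == 0.3_f32);
}
```

In f64 the two values should differ by exactly one representable step. The f32 line is there because the rounding happens to work out differently in single precision, so the famous inequality is really a statement about doubles.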

While learning about machine learning, and noticing how often floats get cut down to ranges like [0,1] or [-1,1], I couldn't help but wonder just how many bits of information were being stripped. So I decided to use the float_next_after crate in Rust to create histograms of how many unique float values were around zero.

For my CPU's sake I stuck to f32. I was pretty surprised by the histogram I ended up with:

It turns out that a massive number of floating point values sit very close to zero. Look at the scale of x here! We're going from -1e-36 to 1e-36. Or, to get a sense of scale:

-0.000000000000000000000000000000000001 to 0.000000000000000000000000000000000001
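To put rough numbers on the spike, here is a sketch of one way to count f32 values in a range. It is not the original histogram code and it does not use the float_next_after crate. Instead it assumes NaN never appears and relies on the fact that, for IEEE 754 floats of the same sign, the raw bit patterns sort in the same order as the values. The names `ordered_index`, `count_between`, `histogram`, and `report` are made up for this example.

```rust
/// Map an f32 to a signed integer whose ordering matches float ordering.
/// Assumes `x` is not NaN; -0.0 and +0.0 both map to 0.
fn ordered_index(x: f32) -> i64 {
    let bits = x.to_bits();
    let magnitude = i64::from(bits & 0x7FFF_FFFF);
    if bits >> 31 == 1 { -magnitude } else { magnitude }
}

/// Number of distinct f32 values v with lo <= v <= hi
/// (the two zeros are counted as one value).
fn count_between(lo: f32, hi: f32) -> i64 {
    assert!(lo <= hi, "expects non-NaN bounds with lo <= hi");
    ordered_index(hi) - ordered_index(lo) + 1
}

/// Counts of f32 values in `bins` equal-width, half-open bins
/// spanning [-limit, limit).
fn histogram(limit: f32, bins: usize) -> Vec<i64> {
    let width = 2.0 * limit / bins as f32;
    let edges: Vec<f32> = (0..=bins).map(|i| -limit + width * i as f32).collect();
    edges
        .windows(2)
        .map(|e| ordered_index(e[1]) - ordered_index(e[0]))
        .collect()
}

/// Print the count for one range and its share of all finite f32 values.
fn report(name: &str, lo: f32, hi: f32) {
    let all = count_between(f32::MIN, f32::MAX);
    let n = count_between(lo, hi);
    let share = 100.0 * n as f64 / all as f64;
    println!("{}: {} values ({:.2}% of finite f32)", name, n, share);
}

fn main() {
    report("[0, 1]", 0.0, 1.0);
    report("[-1, 1]", -1.0, 1.0);
    report("[-1e-36, 1e-36]", -1e-36, 1e-36);

    // Eight bins across the same x range as the plot above.
    for (i, n) in histogram(1e-36, 8).iter().enumerate() {
        println!("bin {}: {}", i, n);
    }
}
```

Running it should show that [-1, 1] covers just under half of all finite f32 values, which works out to about one bit lost out of 32. The bins that touch zero should hold far more values than the outer ones, even at this tiny scale.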